'Design' Blog Series

Designing CX with AI

AI Ethics and Responsible AI in Customer Experience

TL;DR (Too Long; Didn’t Read)

AI-driven customer interactions are reshaping CX with convenience, scalability, and personalization. However, bias, privacy, and transparency risks demand that brands adopt ethical AI principles to maintain trust, meet regulations, and deliver fair, inclusive experiences.

Why Ethical AI Matters

- Customer Trust: 85% of customers demand clarity on how their data and AI decisions impact outcomes. Missteps in fairness or transparency can erode loyalty.

- Regulatory Compliance: Emerging laws like the EU AI Act and CCPA enforce fairness, privacy, and algorithmic transparency. Proactive alignment avoids fines and reputational damage.

- Competitive Advantage: Ethical AI differentiates brands by reinforcing trust and encouraging voluntary data sharing.

Core Ethical Challenges in AI-Driven CX

- Bias and Discrimination: Historical data biases can amplify inequities in recommendations, pricing, or approvals.

- Privacy and Consent: Customers need control over how their data is used.

- Transparency: Customers want understandable explanations of AI decisions.

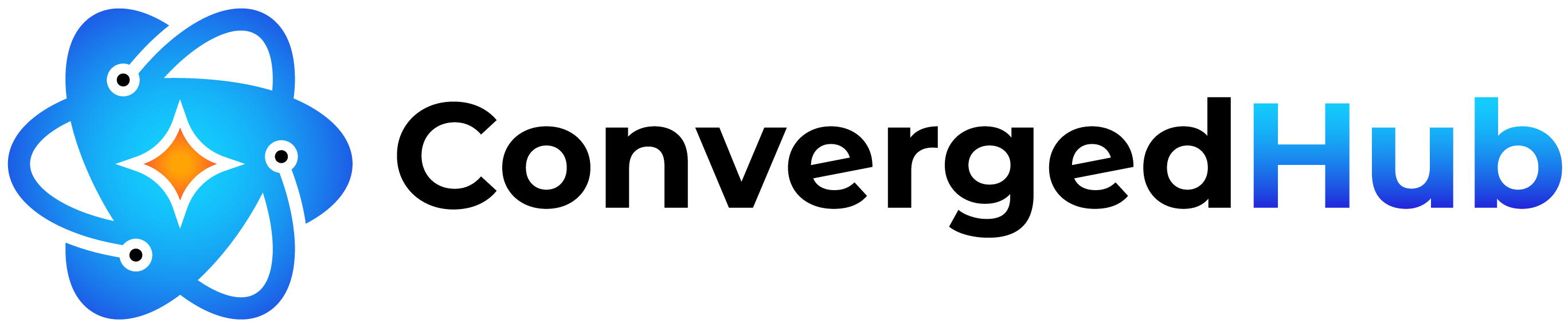

Regulatory Frameworks Shaping Ethical AI

- EU AI Act: A risk-based approach requiring bias testing, transparency, and human oversight for high-risk applications like credit scoring or healthcare.

- U.S. AI Landscape: State-level regulations (e.g., CCPA, CPRA) and the AI Bill of Rights Blueprint emphasize data privacy, transparency, and fairness.

- China’s PIPL: Strict privacy laws and draft AI regulations focus on algorithmic transparency and data localization.

- Global Standards: Singapore offers a voluntary governance framework and Canada has proposed legislation for high-impact systems, both focusing on human-centric, fair AI.

Pillars of Responsible AI for CX Leaders

- Fairness: Use diverse datasets, conduct bias audits, and apply fairness techniques.

- Transparency: Add “Why this?” explanations to chatbots and decision tools; document AI logic.

- Privacy: Employ privacy-by-design and secure data handling to protect customer autonomy.

- Accountability: Establish Responsible AI committees, conduct external audits, and train teams in ethics.

- Human Oversight: Implement human-in-the-loop models for sensitive cases to ensure empathy and accuracy.

The Business Case for Ethical AI

- Strategic Differentiation: Ethical brands enjoy stronger loyalty and higher Net Promoter Scores (NPS).

- Risk Reduction: Fair, privacy-compliant AI minimizes legal and reputational risks.

- Operational Efficiency: Reliable AI models reduce complaints and streamline workflows.

- Market Growth: Ethical AI unlocks underserved customer segments, boosting inclusion and revenue.

Action Plan for CX Leaders

- Short-Term: Audit AI systems for bias and privacy gaps; draft an Ethical AI Charter.

- Medium-Term: Integrate explainability tools and enhance team training in Responsible AI practices.

- Long-Term: Treat ethics as a brand differentiator; invest in privacy-preserving techniques and continuous model improvement.

By embedding fairness, transparency, privacy, and accountability into AI systems, CX leaders ensure technology enhances—not undermines—customer trust. Ethical AI is not just a safeguard; it is a strategic lever for innovation, loyalty, and long-term success in a values-driven digital era.

I. Introduction

In recent years, organizations have accelerated their adoption of AI-driven technologies to reshape customer experience (CX). As AI becomes more integrated into every touchpoint—websites, mobile apps, voice assistants, social channels—customer interactions increasingly occur in a world guided by intelligent systems rather than human agents.

This change is not subtle. Customers now engage with AI systems for product research, troubleshooting, and even after-sales support. The overall effect can be a seamless, convenient, and personalized journey—one that scales to millions of interactions without compromising on speed or availability.

However, alongside these efficiencies come deeper questions:

- Can customers trust an automated system with their personal data?

- Are these algorithmic recommendations fair and unbiased, or do they reinforce societal stereotypes and inequalities?

- Is the underlying logic transparent enough to assure customers that the outcomes they receive—from credit decisions to healthcare advice—are equitable and justifiable?

The growth of AI in CX has raised the stakes for ethical responsibility. Regulatory frameworks such as the EU’s AI Act, as well as data protection laws like GDPR and CCPA, reflect a growing consensus that AI must respect individual rights, privacy, and fairness. Customers, too, now demand clarity: Who is making decisions—the machine or a human behind it—and on what basis? Ethical considerations have thus evolved from being a theoretical concern to a strategic imperative for businesses that want to thrive in a trust-driven economy.

Why Ethics and Responsible AI Are Central to CX Strategy

The shift to AI-first customer engagement makes trust more crucial than ever. Here’s why:

- AI solutions can be a powerful differentiator and value creator for customers

- However, ethical missteps can quickly erode customer confidence and loyalty

- A single viral story about AI bias or data misuse can severely damage brand reputation

Responsible AI goes beyond risk management:

- It ensures algorithmic decisions align with organizational values

- Ethical guidelines help prevent discrimination

- Personal data stays secure, and its use stays transparent

- Customers feel respected and understood

By aligning AI capabilities with ethical standards, businesses build sustainable CX innovation that truly puts customer interests, values, and well-being first.

II. Understanding AI Ethics in the CX Context

Defining Ethical and Responsible AI

Ethical AI refers to the principles and moral standards guiding AI systems’ development and deployment. It ensures that algorithms are designed with fairness, inclusivity, and respect for human rights in mind. Responsible AI operationalizes these principles. It involves embedding checks and balances into the AI lifecycle (data collection, model training, deployment, and ongoing monitoring) to ensure that the system’s decisions align with the defined ethical standards.

In CX, Ethical AI might set the vision: “Our recommendation engine will not discriminate based on protected characteristics.” Responsible AI is how that vision comes to life: regular bias audits, transparency features for customers, and data governance policies that limit unnecessary collection of personal information.

Ethical Dimensions in Customer Interactions

Three areas are particularly relevant:

- Bias and Discrimination: AI systems learn from historical data. If that data contains biases—favoring certain demographics in product recommendations or offering better terms to select groups—these biases can be perpetuated or even amplified by the algorithm. Addressing this requires careful data curation, algorithmic fairness techniques, and ongoing reviews to ensure all customers receive equitable treatment.

- Privacy and Consent: Customers share personal details, from browsing history to location data, often unknowingly. Responsible organizations explain how, why, and where data is used. Providing opt-in/opt-out controls, clear consent forms, and privacy-by-design protocols assures customers that their data rights are respected.

- Explainability and Transparency: Customers increasingly demand to understand how and why AI systems arrived at their conclusions. Whether it’s a loan recommendation or a product suggestion, simple, human-readable explanations of the logic behind each decision foster trust and credibility.

Examples in Action

- Bias Mitigation: A financial institution discovered that its loan recommendation chatbot showed skewed approvals toward certain ZIP codes correlating with higher income. By retraining models with more diverse data and applying fairness metrics, the bank improved equity in approvals. (Future Platforms on AI Bias & Transparency)

- Privacy and Consent: A telecommunications provider found that when it clearly informed customers about how their data would be used to personalize offers, most users willingly opted in. Customers felt empowered by understanding the value exchange and the brand’s honesty.

- Explainability: A healthcare insurer integrated a “Why this recommendation?” button into its symptom-checking chatbot. Customers who understood the rationale behind health advice reported higher trust and satisfaction.

By integrating ethical and responsible AI principles into CX operations, organizations transform abstract moral duties into tangible action steps that strengthen trust, loyalty, and brand reputation.

III. Navigating Compliance and Regulatory Frameworks

The Evolving Global Regulatory Landscape

As AI capabilities advance, governments worldwide are introducing and refining regulations to ensure technology respects human rights, privacy, and fairness. These laws increasingly shape the AI-driven CX environment, influencing how businesses collect data, design algorithms, and communicate outcomes. Understanding these frameworks helps leaders anticipate compliance requirements, reduce legal risks, and meet customer expectations.

1. European Union: The EU AI Act

The EU AI Act takes a risk-based approach, categorizing AI systems by their potential harm. High-risk applications—such as credit scoring, employee management, and certain healthcare and financial services—will face stringent requirements. This includes mandatory testing for bias, transparent documentation of decision processes, and human oversight mechanisms.

What It Means for Businesses:

Companies operating or serving customers in the EU must ensure their CX-related AI systems comply with these standards. For instance, an AI-powered insurance quote tool may need rigorous fairness and explainability assessments. Proactive alignment with the AI Act can prevent costly retrofitting of systems later and reassure customers that fairness and safety are top priorities.

2. United States: Blueprint for an AI Bill of Rights & State-Level Initiatives

At the federal level, the White House’s Blueprint for an AI Bill of Rights outlines principles rather than enforceable laws. It emphasizes algorithmic fairness, data privacy, and transparency. While no comprehensive federal AI law exists yet, this blueprint influences regulatory directions and consumer expectations.

Meanwhile, states like California have enacted robust privacy laws (e.g., CCPA and CPRA), impacting data handling practices. Colorado, Virginia, and Connecticut have introduced their own privacy regulations, reinforcing that data governance and transparency are increasingly mandatory.

What It Means for Businesses:

U.S. companies must monitor a patchwork of state-level privacy laws and align AI-driven CX with principles that protect customers from discrimination and ensure data security. Compliance with state regulations sets a solid foundation should future federal AI laws emerge. It also signals to customers that the brand values their rights and autonomy.

3. China: Personal Information Protection Law (PIPL) and Draft AI Regulations

China’s PIPL, akin to the EU’s GDPR, governs the collection, storage, and transfer of personal data. Draft guidelines for AI indicate a focus on algorithmic transparency and avoiding discriminatory outcomes. For businesses operating in or serving Chinese consumers, these regulations demand careful data localization, privacy-by-design architectures, and regular algorithmic checks.

What It Means for Businesses:

Brands must treat Chinese customer data with strict confidentiality, ensure minimal data collection, and anticipate emerging requirements for explaining algorithmic decisions. Aligning with PIPL and upcoming AI regulations solidifies trust among a massive consumer base.

4. United Kingdom: AI Governance and ICO Guidance

The UK’s Information Commissioner’s Office (ICO) provides guidance on AI, focusing on data protection, fairness, and transparency. While the UK has yet to pass AI-specific legislation like the EU’s AI Act, it adheres closely to GDPR principles and encourages best practices through ICO guidelines.

What It Means for Businesses:

Companies operating in the UK should ensure GDPR-level privacy compliance and keep an eye on evolving guidance. Demonstrating fairness, explainability, and non-discrimination in AI-driven CX builds credibility and resilience against future regulatory changes.

5. Singapore, Canada, and Other Jurisdictions

Singapore has introduced its Model AI Governance Framework, which provides voluntary guidelines for responsible AI deployment focused on transparency, fairness, and human-centricity. Canada’s proposed Artificial Intelligence and Data Act (AIDA) aims to regulate high-impact AI systems, ensuring they are transparent and subject to human oversight.

What It Means for Businesses:

Operating in multiple jurisdictions necessitates a flexible compliance approach. Businesses must harmonize global best practices—fairness audits, privacy dashboards, explainability features—so they can adapt to specific local standards. Embracing these principles universally not only simplifies compliance but also enhances brand reputation internationally.

Key Themes and Business Implications

Across these diverse regulatory landscapes, common threads emerge: a drive for greater fairness, transparency, and accountability; stronger data rights and privacy protections; and the need for human oversight. For businesses, this means:

- Proactive Compliance: Aligning AI-driven CX strategies with emerging standards before they are fully codified reduces future legal risks and complexity.

- Global Harmonization: Multinational brands must implement versatile frameworks that meet the most stringent regulations they face, ensuring consistency and efficiency.

- Customer Trust as a Currency: Regulatory compliance is not just a legal obligation—it’s a competitive advantage. Demonstrating adherence to ethical and responsible AI principles differentiates a brand and reassures customers that their interests come first.

By understanding and anticipating these regulatory shifts, CX leaders can build AI systems that are not only compliant but also customer-friendly, transparent, and fair. This proactive approach fosters long-term trust and positions the organization as a responsible innovator in a rapidly evolving global marketplace.

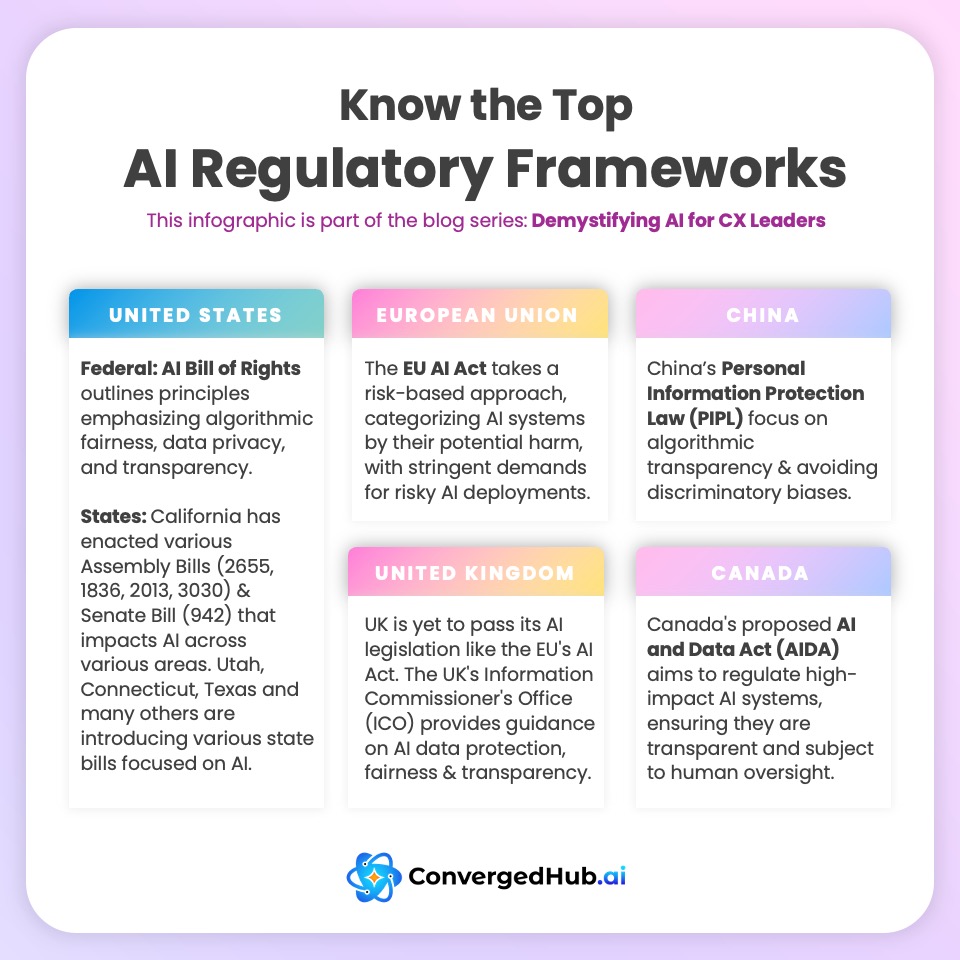

IV. Core Pillars of Ethical and Responsible AI

To operationalize ethics in AI-driven CX, organizations can focus on five foundational pillars. Each pillar supports a responsible approach that respects customers, builds trust, and leads to measurable business benefits.

1. Fairness and Non-Discrimination

Objective: Ensure that no customer segment is systematically disadvantaged.

Key Tactics:

- Bias Audits: Routinely test models for demographic imbalances in recommendations, pricing, or risk assessments.

- Diverse Datasets: Incorporate training data from broad, representative samples. For instance, a global e-commerce platform can source product feedback from varied regions, ensuring that recommendations cater to different cultural preferences.

- Algorithmic Fairness Techniques: Deploy fairness constraints—mathematical methods that adjust model parameters to reduce disparate outcomes.

Result: By actively removing bias, organizations create inclusive customer journeys. This not only aligns with ethical principles but also expands the customer base, since previously underserved groups receive equitable consideration.
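To make the bias audit tactic concrete, here is a minimal sketch of one common check: comparing favorable-outcome rates across customer groups. The group labels, the sample data, and the 0.8 review threshold are illustrative assumptions, not values from any specific regulation or tool.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, approved) pairs, where `approved`
    is True when the model produced the favorable outcome.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        if approved:
            favorable[group] += 1
    return {g: favorable[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome
    return min(rates.values()) / highest

# Hypothetical audit sample: (customer segment, offer approved?)
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)

REVIEW_THRESHOLD = 0.8  # assumed cut-off for flagging a model for review
needs_review = ratio < REVIEW_THRESHOLD
```

A low ratio does not prove discrimination on its own; it is a signal that the model and its training data deserve a closer look.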

2. Transparency and Explainability

Objective: Help customers and stakeholders understand how AI-driven outcomes are determined.

Key Tactics:

- Explainable AI (XAI) Tools: Implement tools that break down complex model decisions into understandable terms.

- Decision Flow Documentation: Maintain an internal record of data inputs, model logic, and outputs. Make summaries available to customers seeking clarity on why they received a particular offer.

- User-Friendly Interfaces: Add “Why this?” buttons and short textual explanations in chatbots or recommendation engines.

Result: Transparency demystifies AI. When customers can see the reasoning behind a product suggestion or a support resolution, they feel respected and informed. This translates into improved trust, reduced disputes, and higher engagement.
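As a rough illustration of a “Why this?” explanation, the sketch below assumes a simple linear scoring model, where each feature's contribution is its weight times its value. The feature names, weights, and wording are hypothetical; production systems with more complex models would typically rely on a dedicated explainability library.

```python
def explain_score(weights, features, top_n=2):
    """Return the top_n (feature, contribution) pairs by absolute impact."""
    contributions = {
        name: weights.get(name, 0.0) * value
        for name, value in features.items()
    }
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return ranked[:top_n]

def why_this(weights, features, labels, top_n=2):
    """Turn the top contributions into a short customer-facing sentence."""
    parts = []
    for name, contribution in explain_score(weights, features, top_n):
        direction = "raised" if contribution > 0 else "lowered"
        parts.append(f"{labels[name]} {direction} this recommendation's score")
    return "Why this? " + "; ".join(parts) + "."

# Hypothetical model weights and one customer's feature values
weights = {"recent_views": 0.6, "past_purchases": 0.3, "price_gap": -0.5}
features = {"recent_views": 3, "past_purchases": 1, "price_gap": 2}
labels = {
    "recent_views": "your recent views of similar items",
    "past_purchases": "your past purchases",
    "price_gap": "the price difference from your usual range",
}
message = why_this(weights, features, labels)
```

Keeping the explanation to the two or three strongest factors is a design choice: it trades completeness for a message a customer can read at a glance.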

Tools such as ConvergedHub.ai empower businesses to build significant transparency into their AI design.

3. Privacy and Data Protection

Objective: Protect customer data rights and build confidence in data handling.

Key Tactics:

- Privacy-by-Design: Integrate data minimization, encryption, and access controls into the system’s architecture from the start.

- Clear Consent Management: Provide transparent opt-in/opt-out workflows, ensuring customers have autonomy over their information.

- Secure Storage and Transfers: Comply with local data sovereignty laws, using region-based data centers and robust security protocols.

Result: Customers who trust an organization’s data practices are more willing to share information, which in turn improves personalization. Privacy protection thus becomes a trust multiplier.
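The consent and data-minimization tactics above can be sketched as a purpose-based filter: a service only sees the fields needed for a purpose the customer has opted in to. The purposes, field names, and the `ConsentRecord` class are hypothetical and exist only for this example.

```python
from dataclasses import dataclass, field

@dataclass
class ConsentRecord:
    customer_id: str
    purposes: set = field(default_factory=set)  # purposes the customer opted in to

    def opt_in(self, purpose):
        self.purposes.add(purpose)

    def opt_out(self, purpose):
        self.purposes.discard(purpose)

# Hypothetical mapping of each purpose to the minimum fields it needs
FIELDS_BY_PURPOSE = {
    "support": {"customer_id", "open_tickets"},
    "personalization": {"customer_id", "browsing_history", "location"},
}

def minimized_view(profile, consent, purpose):
    """Return only the fields allowed for `purpose`, or nothing without consent."""
    if purpose not in consent.purposes:
        return {}
    allowed = FIELDS_BY_PURPOSE.get(purpose, set())
    return {k: v for k, v in profile.items() if k in allowed}

profile = {
    "customer_id": "c-1",
    "open_tickets": 2,
    "browsing_history": ["phones", "cases"],
    "location": "Berlin",
}
consent = ConsentRecord("c-1")
consent.opt_in("support")

support_view = minimized_view(profile, consent, "support")
personalization_view = minimized_view(profile, consent, "personalization")
```

In this sketch the personalization service receives an empty view until the customer opts in, which keeps the default on the side of collecting less.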

4. Accountability and Governance

Objective: Establish clear oversight, ensuring AI aligns with corporate values and regulatory requirements.

Key Tactics:

- Responsible AI Committees: Form cross-functional teams—CX leaders, data scientists, compliance officers, ethicists—to review AI deployments regularly.

- Ethical AI Charters and Policies: Document principles and procedures governing AI use. Consider frameworks like IEEE’s Ethically Aligned Design or ISO/IEC guidelines as references.

- Third-Party Audits: Engage external evaluators to assess compliance, fairness, and performance, lending credibility to public claims of responsible AI use.

Result: Accountability mechanisms promote a culture of responsibility. Employees feel empowered to report ethical concerns, and the organization can swiftly correct course if misalignments emerge.

5. Human Oversight and Intervention

Objective: Complement AI efficiency with human judgment and empathy.

Key Tactics:

- Human-in-the-Loop Models: Allow human agents to review or override AI decisions in complex or sensitive cases.

- Feedback Loops: Encourage frontline staff to flag recurring issues and patterns, feeding insights back into model improvements.

- Hybrid Customer Journeys: Blend automated and human touchpoints. A chatbot can handle FAQs, but a human expert can step in for nuanced queries.

Result: Human oversight ensures that AI-driven CX does not become impersonal or rigid. It also helps identify subtle ethical risks and continually refine the system’s behavior.
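One simple way to picture a human-in-the-loop model is a routing rule that sends sensitive or low-confidence AI decisions to a person. The topic list, the 0.85 confidence floor, and the `AiDecision` type below are assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Assumed list of topics that always get a human review
SENSITIVE_TOPICS = {"credit_decision", "health_advice", "account_closure"}
CONFIDENCE_FLOOR = 0.85  # assumed minimum confidence for an automated reply

@dataclass
class AiDecision:
    topic: str
    answer: str
    confidence: float  # model's own confidence estimate, between 0 and 1

def route(decision):
    """Return "human_review" for sensitive or uncertain cases, else "auto_reply"."""
    if decision.topic in SENSITIVE_TOPICS:
        return "human_review"
    if decision.confidence < CONFIDENCE_FLOOR:
        return "human_review"
    return "auto_reply"

faq = AiDecision("store_hours", "We open at 9am.", 0.97)
unsure = AiDecision("billing", "Your refund may take 5 days.", 0.60)
loan = AiDecision("credit_decision", "Application declined.", 0.99)

routes = [route(d) for d in (faq, unsure, loan)]
```

Note that the sensitive-topic check runs first, so even a highly confident model does not bypass review on those cases.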

Integration of Solutions into CX Strategy

Ethical challenges rarely occur in isolation. Addressing bias, privacy, and transparency together requires holistic governance. By blending technical tools (bias detection, explainable AI software) with organizational interventions (Responsible AI committees, ethics training, vendor evaluation criteria), companies create an ecosystem where responsible AI flourishes.

When effectively implemented, these pillars create a robust ethical framework. Organizations that commit to fairness, transparency, privacy, accountability, and human oversight can deliver customer experiences that resonate deeply with customers’ moral and emotional expectations, ultimately leading to stronger brand loyalty and sustainable success.

V. The Business Case for Ethical AI in CX

1. Beyond Compliance: Strategic Differentiation

Ethical AI is more than an obligation—it is a strategic opportunity. In an era where customers can easily switch providers, brands that go the extra mile to demonstrate fairness, transparency, and respect for data rights stand out. As highlighted by Zendesk, when customers sense ethical practice, they respond with stronger loyalty and improved Net Promoter Scores (NPS).

2. Operational Efficiency and Reduced Risks

Ethical AI drives tangible operational benefits. Models refined to avoid biases produce more reliable outcomes, reducing customer complaints and support escalations. Clear data usage policies prevent misunderstandings that lead to costly legal disputes. By proactively addressing biases and privacy concerns, businesses minimize reputational and regulatory risks—allowing CX teams to focus on innovation rather than crisis management.

3. Aligning with Corporate Values and Culture

For organizations that emphasize values like inclusivity, fairness, and human-centric service, ethical AI offers a means to embody these principles. Internally, employees are more engaged when they see that their work contributes positively to society. Externally, customers who share these values appreciate interacting with a brand that “walks the talk.”

4. Financial Upsides of Trust and Loyalty

A PwC report noted that most consumers prefer ethically accountable companies. Beyond loyalty and retention, responsible AI can unlock new customer segments traditionally underserved due to biases. Improved trust and broadened market appeal ultimately translate into revenue growth and competitive resilience.

In essence, ethical AI acts as a foundation for long-term strategic advantage. By marrying moral responsibility with operational rigor, organizations can harness the transformative power of AI while maintaining the trust that underpins lasting customer relationships.

VI. Frameworks and Governance Structures for Responsible AI

1. Responsible AI Committees and Cross-Functional Teams

A well-structured governance framework ensures that ethical considerations are not overlooked. Responsible AI committees bring together CX leaders, data scientists, compliance officers, and ethicists. They review AI projects, set guidelines, and enforce standards. By having decision-makers from different backgrounds, organizations avoid tunnel vision and develop more holistic solutions.

Example: Microsoft’s Office of Responsible AI demonstrates that centralized oversight can foster a culture where ethics and innovation co-exist seamlessly.

2. Internal Policies and External Frameworks

Companies can look to established guidelines like IEEE’s Ethically Aligned Design or ISO/IEC standards to shape internal policies. Salesforce’s Ethical AI Guidelines and Microsoft’s Responsible AI Standard provide tangible templates. Incorporating these frameworks into internal charters, training materials, and performance metrics ensures consistent application of principles.

3. Continuous Monitoring and Auditing

Ethical AI is a living practice. Regular audits help detect drifting biases, changes in data quality, or new vulnerabilities. Using audit trails, organizations can trace AI decisions and demonstrate compliance during external reviews. Tools like IBM Watson OpenScale or Google’s Fairness Indicators streamline this monitoring process.
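As one possible shape for an audit trail, the sketch below keeps an append-only log in which each entry stores a hash of the previous one, so later edits to a recorded decision become detectable. Hash chaining is an assumption made for this example, not a requirement of the tools named above, and the decision fields are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry

def _entry_hash(prev_hash, decision):
    """Hash the previous entry's hash together with this decision's content."""
    payload = json.dumps(decision, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

def append_entry(log, decision):
    """Append a decision record that is chained to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "decision": decision,
        "prev_hash": prev_hash,
        "hash": _entry_hash(prev_hash, decision),
    })
    return log

def verify(log):
    """Return True if no entry was altered or reordered after being written."""
    prev_hash = GENESIS_HASH
    for entry in log:
        expected = _entry_hash(prev_hash, entry["decision"])
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True

log = []
append_entry(log, {"customer": "c-1", "model": "offers-v2", "outcome": "offer_shown"})
append_entry(log, {"customer": "c-2", "model": "offers-v2", "outcome": "escalated"})
intact = verify(log)
```

A reviewer can rerun `verify` during an external audit; in practice the log would also need durable, access-controlled storage, which this sketch leaves out.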

4. Employee Training and Cultural Shift

Empowering employees to recognize and address ethical concerns is essential. Scenario-based training workshops, role rotation programs (where data scientists learn about CX challenges and vice versa), and open-door policies for reporting potential issues encourage a proactive stance. A workforce that appreciates the moral dimensions of AI can spot ethical risks early.

5. Leveraging Third-Party Tools and Certifications

Third-party certifications and audits lend credibility. Engaging independent assessors to validate fairness, data protection, or explainability claims adds an extra layer of assurance. As standards mature, certifications for AI ethics may become as integral as ISO certifications for quality management.

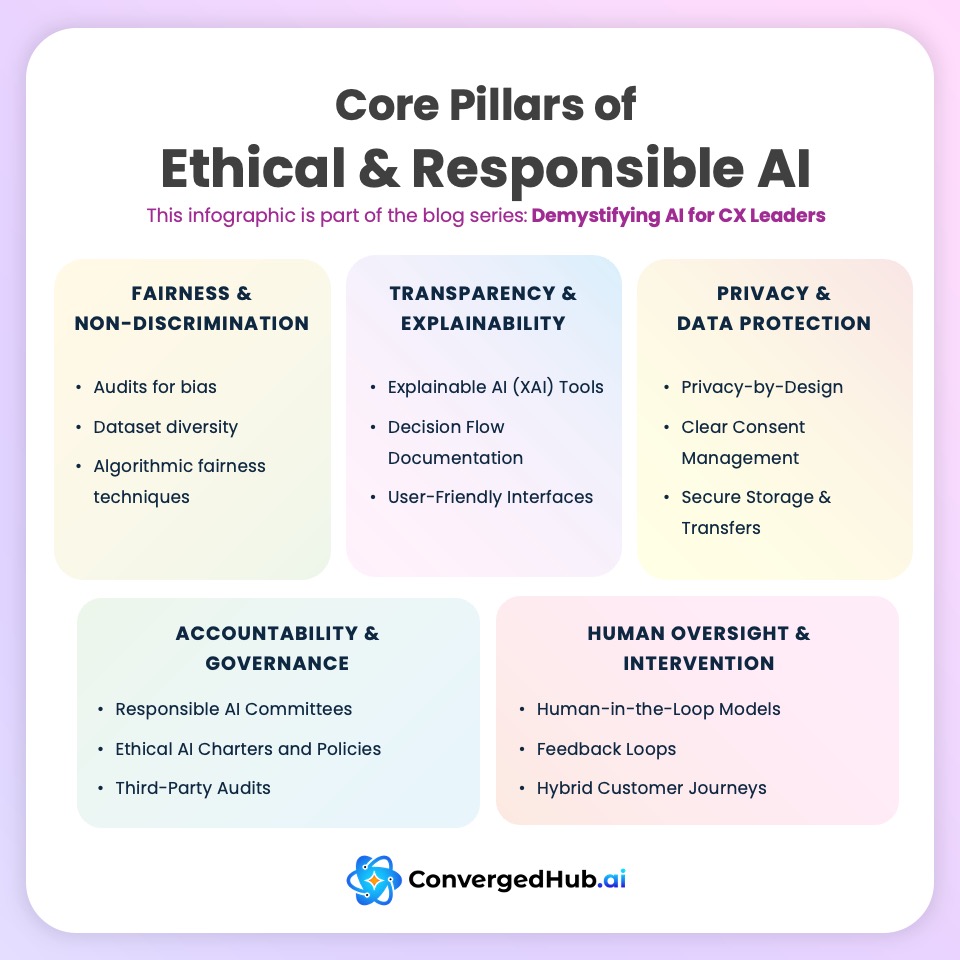

VII. Actionable Steps for CX Leaders

Implementing ethical AI in CX need not be overwhelming. By starting small and scaling strategically, leaders can gradually build an environment where responsible AI thrives.

Immediate Actions

- AI Ethics Audit: Identify existing AI applications in CX and evaluate them for potential biases, privacy gaps, or opaque decision-making.

- Publish an Ethical AI Charter: Draft a concise document outlining the organization’s commitments to fairness, transparency, privacy, and accountability. Share it internally and externally.

- High-Risk Use Cases First: Prioritize areas where AI decisions have significant impacts on customer outcomes—such as financial approvals or healthcare recommendations—and apply stricter ethical standards there.

Medium-Term Initiatives

- Integrate Explainability Features: Add “Why this?” buttons and understandable explanations in chatbots, recommendation systems, or pricing models.

- Develop Transparent Model Documentation: Keep thorough records of data sources, model parameters, training methods, and decision logic. Make summaries accessible to customers and regulators.

- Upskill Teams on Ethical AI: Train data scientists on bias detection techniques, CX agents on explaining AI outcomes, and leaders on strategic risk management. Consider scenario-based workshops or certified courses.

Long-Term Vision

- Bake Ethics into Corporate Strategy: Treat ethical AI as a continuous improvement area. Regularly revisit policies, retrain models, and update governance structures as technology and regulations evolve.

- Use Ethics as a Brand Differentiator: Highlight fairness, privacy, and transparency commitments in marketing campaigns. Customers increasingly gravitate toward brands that demonstrate moral responsibility.

- Innovate with Privacy-Preserving Techniques: Explore advanced methods like federated learning, differential privacy, or synthetic data to protect customer information and comply with stringent data sovereignty laws.
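To give a feel for differential privacy, here is a minimal sketch of the Laplace mechanism applied to a count query: noise scaled to 1/epsilon is added so that any single customer's presence has a limited effect on the published number. The epsilon value and the sample data are illustrative assumptions, and a production system should use a vetted privacy library rather than this hand-rolled version.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of items matching `predicate`.

    A count changes by at most 1 when one customer is added or removed,
    so the noise scale is 1 / epsilon. Smaller epsilon means more noise.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)

# Hypothetical data: True means the customer opted in to a loyalty program
opted_in = [True] * 40 + [False] * 60
noisy = private_count(opted_in, bool, epsilon=1.0)
```

Each call returns a different value near the true count of 40; repeated queries consume privacy budget, so real deployments track how often a statistic is released.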

By following these steps, CX leaders set a trajectory toward responsible AI maturity. Over time, ethical considerations become ingrained in the organizational culture, ensuring that as AI capabilities grow, so does the brand’s commitment to doing right by its customers.

VIII. Real-World Case Studies

A. Amazon’s Recruiting Algorithm (Cautionary Tale)

- Scenario: Amazon built an AI recruitment tool that favored male candidates due to biases in historical hiring data. While not directly CX-related, it illustrates how unaddressed bias can undermine trust and fairness.

- CX Takeaway: The same principle applies to AI-driven CX tools. If chatbots or recommendation engines rely on biased data, outcomes will be skewed. Regular audits and diverse training sets are essential.

Reference: Reuters: Amazon scraps secret AI recruiting tool that showed bias against women

B. Microsoft’s Responsible AI Journey

- Scenario: Microsoft formed an Office of Responsible AI and introduced the Responsible AI Standard, guiding product teams to embed fairness, privacy, and accountability into their solutions.

- CX Takeaway: CX leaders using Microsoft’s AI-driven tools can rely on these embedded safeguards for voice analytics, chatbots, and personalization features that align with ethical standards.

C. Vodafone’s Ethical Chatbot Deployment

- Scenario: Vodafone’s TOBi chatbot was continually reviewed for biased responses and privacy issues. Ongoing refinements led to fairer, more accurate interactions, improving NPS and reducing complaints.

- CX Takeaway: Continuous oversight and iterative improvements ensure AI-powered assistants earn customer trust over time.

D. Salesforce’s Ethical Use of AI in CRM

- Scenario: Salesforce’s Einstein AI applies guidelines for ethical data use, explainability, and bias detection. Users can deploy AI-driven CRM features—such as lead scoring or product recommendations—knowing they meet ethical benchmarks.

- CX Takeaway: Partnering with vendors that prioritize ethical AI simplifies compliance and builds a strong foundation for trustworthy CX innovation.

These case studies underscore the universal applicability of ethical and responsible AI principles. Whether it’s recruitment, CRM, or telecom support, embedding ethics into AI-driven processes safeguards brand integrity and fosters trust.

IX. Conclusion: Key Takeaways for CX Leaders

As organizations harness AI to transform customer experience, the ethical dimensions of these technologies cannot be overlooked. Customers expect more than efficiency—they want fairness, transparency, privacy, and accountability. Regulations will continue to tighten, and reputational risks associated with AI misuse will not diminish.

Core Insights:

- Ethical AI as a Trust Enabler: By proactively addressing bias, privacy, and explainability, brands strengthen the bond with customers. Trust becomes a cornerstone of CX, encouraging customers to share data willingly and embrace AI-driven innovations.

- Transparency as a Market Differentiator: Clear explanations and disclosure build confidence. In a crowded marketplace, brands that openly communicate their ethical commitments set themselves apart.

- Governance and Accountability: Robust frameworks—committees, audits, policies—ensure that ethics is not left to chance. A structured approach keeps organizations ahead of regulatory curves and customer expectations.

- Long-Term Strategic Value: Ethical AI practices minimize reputational damage, reduce operational frictions, and open doors to underserved markets. Over time, these principles become integral to a sustainable competitive advantage.

Most importantly, ethical AI ensures that the human element remains at the center of customer experience. As algorithms become more sophisticated, the guiding values of fairness, respect, and integrity ensure that technology serves, rather than subverts, human interests. By committing to ethical and responsible AI, CX leaders pave the way for innovation that inspires trust and loyalty—securing a place for their brands in an evolving, value-driven digital landscape.